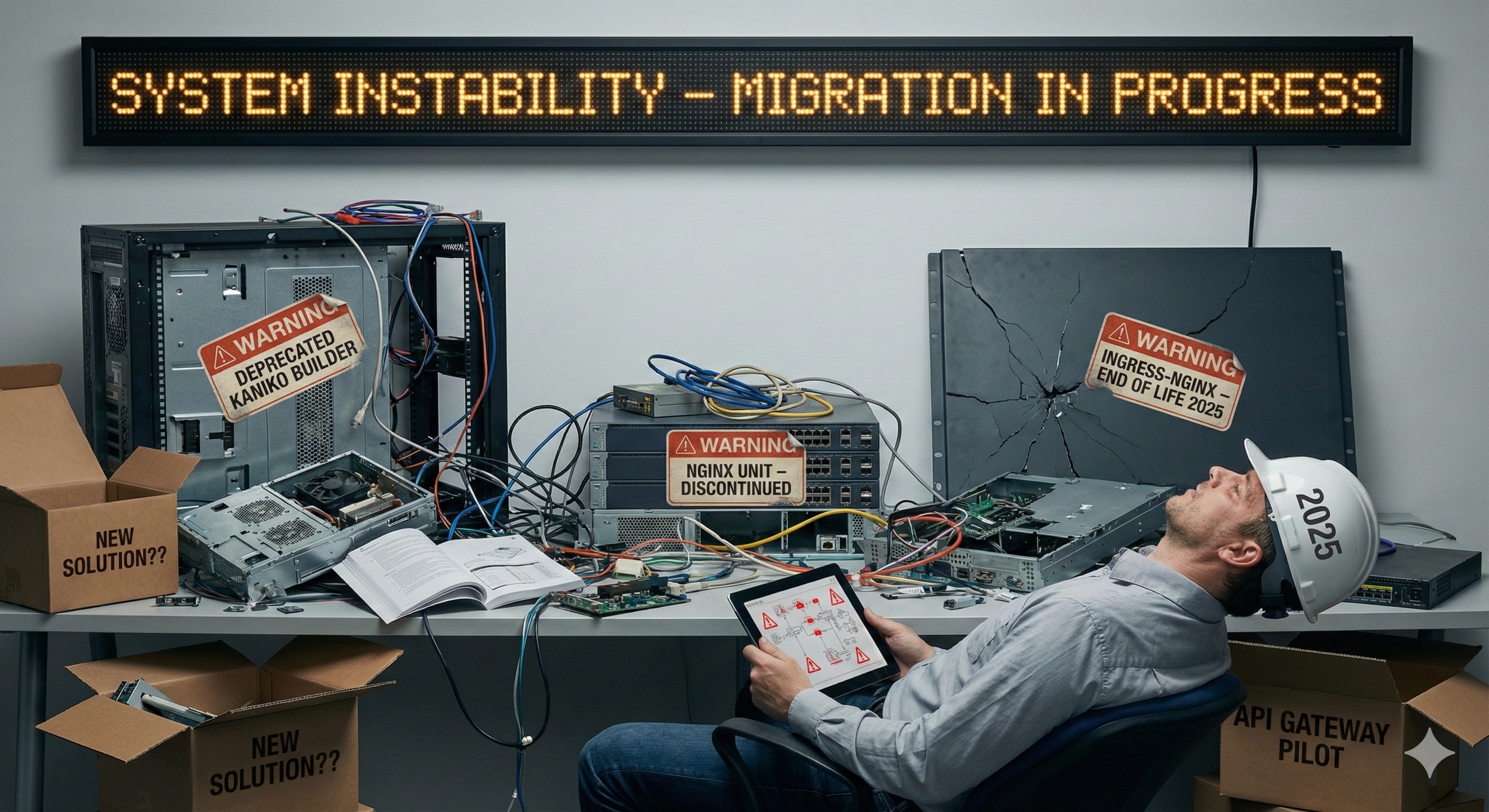

The more operator tools you use, the more time you will spend replacing them once they are deprecated. Your processes might be well optimized, but a chain of deprecations forces you to spend time on problems you have already solved, and each replacement is a change that could leave your system less stable than before.

The year 2025 was a year of deprecation:

Kaniko was deprecated (link). Our team spent considerable time finding a replacement with similar performance so that pipeline build times would not increase.

NGINX Unit (link) was discontinued. Similarly, we had to find an application server that could handle high loads without slowing down.

Ingress-NGINX (link) was discontinued, and this was the most impactful of the three. The options were either to migrate to another solution or to start using an API gateway.

Finding an “ideal” solution that fits your current needs doesn’t guarantee long-term stability. One day, you might have to migrate to something new, and every such migration introduces potential instability into your system.